Concepts

ELK stands for Elasticsearch, Logstash, and Kibana.

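Elasticsearch is an open-source distributed search engine that provides three core functions: collecting, analyzing, and storing data. Its features include distributed operation, zero configuration, automatic node discovery, automatic index sharding, index replicas, a RESTful API, multiple data sources, and automatic search load balancing.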
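Automatic index sharding means every document is assigned to exactly one primary shard. Conceptually, Elasticsearch routes a document with `shard = hash(_routing) % number_of_primary_shards`, where `_routing` defaults to the document ID. The sketch below is a simplified illustration of that idea, assuming default routing and using CRC32 as a stand-in for the Murmur3 hash Elasticsearch really uses, so its shard numbers will not match a real cluster.

```python
import zlib


def route_to_shard(routing, num_primary_shards):
    """Pick a primary shard for a document, in the spirit of Elasticsearch routing.

    Simplified: shard = hash(routing) % num_primary_shards. CRC32 stands in for
    Elasticsearch's Murmur3 hash so this runs with the standard library only.
    """
    if num_primary_shards <= 0:
        raise ValueError("num_primary_shards must be positive")
    return zlib.crc32(routing.encode("utf-8")) % num_primary_shards
```

The same ID always lands on the same shard, which is also why the number of primary shards is fixed when an index is created: changing the divisor would send existing IDs to different shards.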

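Logstash is a tool for collecting, analyzing, and filtering logs, and it supports a large number of data ingestion methods. It typically runs in a client/server architecture: the client is installed on every host whose logs need to be collected, and the server filters and modifies the logs received from all nodes before forwarding them to Elasticsearch.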

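Kibana is a free, open-source tool that provides a user-friendly web interface for analyzing the logs handled by Logstash and Elasticsearch, helping you aggregate, analyze, and search important log data.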

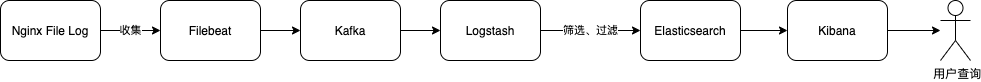

ELK on its own can meet the log collection requirement, but for performance and efficiency it works better when combined with Filebeat and Kafka.

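Filebeat is a log data shipper written in Go. Its configuration is simple and it uses very few system resources; since it is lighter than Logstash, it is a good choice when all you need is log collection. Its filtering capabilities, however, are not as powerful as Logstash's, so if you want to filter or transform the data before pushing it into Elasticsearch, you need to pair it with Logstash. Logstash, in turn, consumes a lot of resources and slows down when it has to process a large volume of data in a short time. The usual approach is therefore to put a Kafka message queue between Filebeat and Logstash as a buffer that decouples the two: Filebeat pushes the collected log data into Kafka, Logstash consumes it, and neither side interferes with the other.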
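The buffering and decoupling role described above can be illustrated in a single process. This is a minimal sketch, assuming a bounded `queue.Queue` as a stand-in for a Kafka topic and a hypothetical filter that drops health-check requests; unlike Kafka, this buffer lives in memory, is not persisted, and has only one consumer.

```python
import queue
import threading

STOP = object()  # sentinel marking the end of the stream


def shipper(lines, buffer):
    """Filebeat's role: push raw log lines into the buffer, then signal the end."""
    for line in lines:
        buffer.put(line)  # blocks only while the buffer is full (backpressure)
    buffer.put(STOP)


def processor(buffer, out):
    """Logstash's role: consume at its own pace, filter, and forward."""
    while True:
        line = buffer.get()
        if line is STOP:
            break
        if "/healthz" in line:  # hypothetical filter: drop health-check requests
            continue
        out.append(line)


def run_pipeline(lines, buffer_size=10):
    """Run producer and consumer concurrently, connected only by the buffer."""
    buffer = queue.Queue(maxsize=buffer_size)
    out = []
    consumer = threading.Thread(target=processor, args=(buffer, out))
    consumer.start()
    shipper(lines, buffer)
    consumer.join()
    return out
```

The producer never calls the consumer directly; a slow consumer only makes the producer wait once the buffer is full, which is the behavior the Kafka layer provides between Filebeat and Logstash, at a much larger scale.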

The figure below shows the log collection flow.

Environment

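I did not build Elasticsearch, Logstash, Kibana, or Kafka from source on my own servers; instead I used existing Alibaba Cloud products. These products are paid: Kafka comes with a large discount on a one-month purchase for new customers, and the others have a one-month free trial, so it is worth trying them out while the trial lasts.

If you build the environment yourself, it simply takes more time; once it is set up, the overall workflow is the same.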

Hands-on

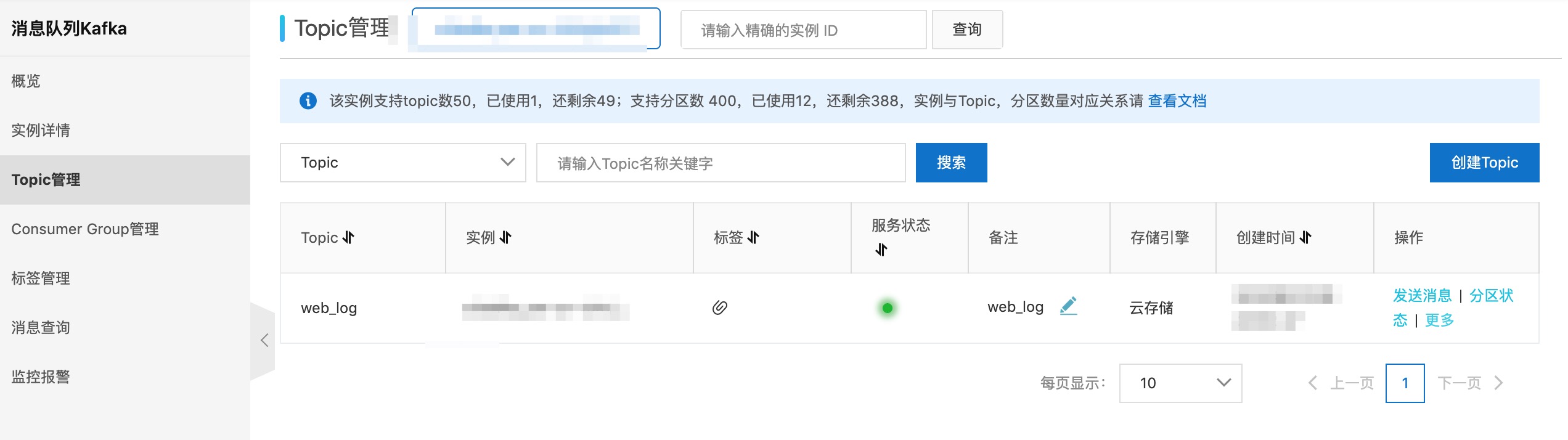

Kafka

For Kafka I used the Alibaba Cloud product directly. You can also build it yourself, in which case you need to install both Kafka and ZooKeeper.

Filebeat

1. Download Filebeat

```shell
tar zxf filebeat-7.6.0-linux-x86_64.tar.gz
```

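2. Edit the configuration (filebeat.yml)

The configuration mainly defines the input and the output: the input specifies where the collected data comes from (the Nginx log), and the output specifies where it is sent (Kafka).

When you open filebeat.yml you will find no Kafka settings, but filebeat.reference.yml has them, so first run the following command to append the Kafka settings to filebeat.yml (the line range is the one I used with the 7.6.0 reference file and will differ in other versions):

```shell
sed -n '1847,2000p' filebeat.reference.yml >> filebeat.yml
```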
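Because the hard-coded line range in the `sed` command only fits one version of the reference file, it can be safer to locate the section by its title instead. A minimal sketch, assuming that each section of filebeat.reference.yml opens with a comment banner such as `#----- Kafka output -----` (dashes or `=` signs around a title); verify that against your own copy before relying on it. `extract_section` and `append_section` are hypothetical helpers, not part of Filebeat.

```python
import re

# Assumption: sections open with a comment banner like "#----- Kafka output -----"
# (runs of "-" or "=" around a title). Pure separator lines have no title and are ignored.
BANNER = re.compile(r"^#\s*[-=]{3,}\s*([^-=\s].*?)\s*[-=]{3,}\s*$")


def extract_section(text, title):
    """Return the banner whose title equals `title` (case-insensitive) and every
    line after it, up to but not including the next banner."""
    lines = []
    inside = False
    for line in text.splitlines(keepends=True):
        banner = BANNER.match(line)
        if banner:
            if inside:
                break  # reached the next section
            inside = banner.group(1).lower() == title.lower()
        if inside:
            lines.append(line)
    return "".join(lines)


def append_section(src_path, dst_path, title="Kafka output"):
    """Append the named section of src_path to dst_path (e.g. filebeat.yml)."""
    with open(src_path, encoding="utf-8") as src:
        section = extract_section(src.read(), title)
    if not section:
        raise ValueError(f"section {title!r} not found in {src_path}")
    with open(dst_path, "a", encoding="utf-8") as dst:
        dst.write(section)
```

Calling `append_section("filebeat.reference.yml", "filebeat.yml")` plays the same role as the `sed` command, but fails loudly instead of copying the wrong lines when the file layout changes.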

The full configuration is as follows:

```yaml
###################### Filebeat Configuration Example #########################
```

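Notes:

- Fill in the hosts field in the Kafka output section according to your actual environment.

- Comment out "output.elasticsearch"; otherwise Filebeat exits at startup with an error (Exiting: error unpacking config data: more than one namespace configured accessing 'output' ….)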
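The error above appears because Filebeat accepts only one enabled output. A small pre-flight check can catch this before starting Filebeat. This is a minimal sketch, assuming the outputs are written in the dotted top-level style used by the shipped filebeat.yml (`output.kafka:`); the nested `output:` / `kafka:` form is not detected, and `enabled_outputs` and `check_single_output` are hypothetical helpers, not Filebeat features.

```python
import re

# Assumption: outputs use the dotted top-level style ("output.kafka:").
# Commented lines ("#output.kafka:") and indented lines do not match.
OUTPUT_KEY = re.compile(r"^output\.(\w+)\s*:")


def enabled_outputs(config_text):
    """Return the output types whose top-level key is not commented out."""
    found = []
    for line in config_text.splitlines():
        match = OUTPUT_KEY.match(line)
        if match:
            found.append(match.group(1))
    return found


def check_single_output(config_text):
    """Filebeat needs exactly one output; fail early if that is not the case."""
    outputs = enabled_outputs(config_text)
    if len(outputs) != 1:
        raise ValueError(f"expected exactly one enabled output, found {outputs}")
    return outputs[0]
```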

3. Start

(1) Start in debug mode (in the foreground)

```shell
./filebeat -e -c filebeat.yml
```

(2) Start as a background daemon

```shell
nohup ./filebeat -e -c filebeat.yml &
```

After starting it, check with ps; if the process exists, Filebeat started successfully.

```shell
[root@kai www]# ps aux | grep filebeat
```
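One catch with `ps aux | grep filebeat` is that the grep process itself shows up in the output, so something always "matches". A minimal sketch of a stricter check, assuming the standard 11-column `ps aux` layout (USER through COMMAND); `find_pids` and `filebeat_pids` are hypothetical helpers. On the command line, `pgrep -f filebeat` is a simpler alternative.

```python
import subprocess


def find_pids(ps_output, name):
    """Return PIDs from `ps aux` output whose command mentions `name`,
    skipping any grep process that is itself searching for the name."""
    pids = []
    for line in ps_output.splitlines()[1:]:  # first line is the column header
        fields = line.split(None, 10)  # USER PID ... TIME COMMAND (11 columns)
        if len(fields) < 11:
            continue
        command = fields[10]
        if name in command and command.split()[0] != "grep":
            pids.append(int(fields[1]))
    return pids


def filebeat_pids():
    """Run `ps aux` and return the PIDs of running filebeat processes."""
    result = subprocess.run(["ps", "aux"], capture_output=True, text=True, check=True)
    return find_pids(result.stdout, "filebeat")
```

An empty list from `filebeat_pids()` means Filebeat is not running.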

4. Verify

What to verify: whether data collection succeeds.

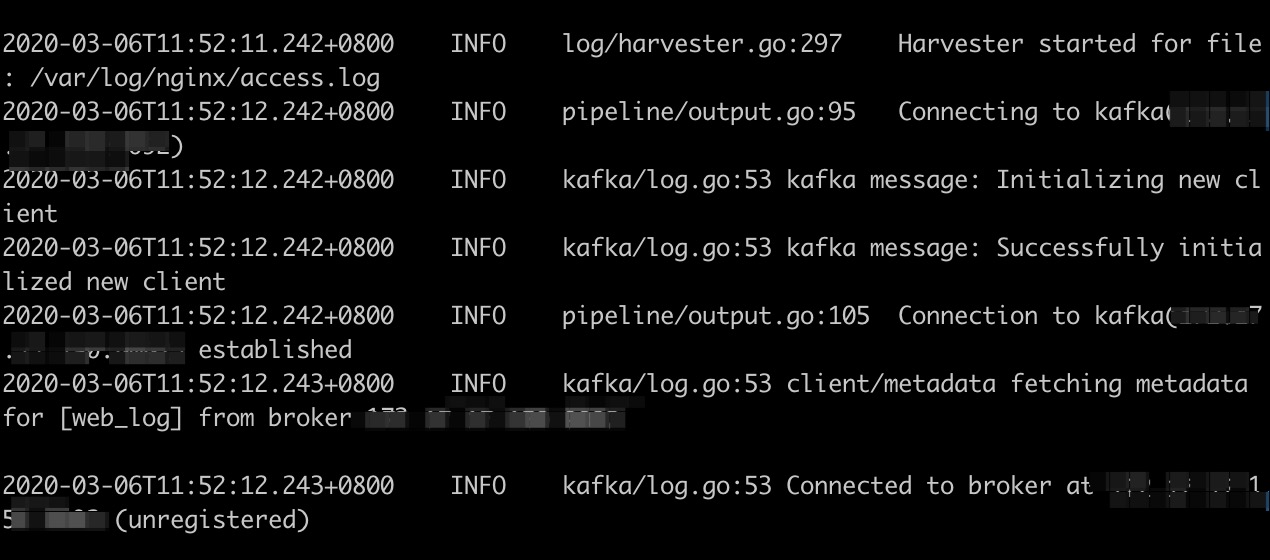

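Filebeat startup mode: debug mode

Goal: collect the Nginx access logs and push them to the specified Kafka instance.

(1) Visit pages on the website so that Nginx writes access log entries.

(2) In debug mode, watch the terminal output. Output like the following shows that Filebeat is collecting log data and pushing it to Kafka: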

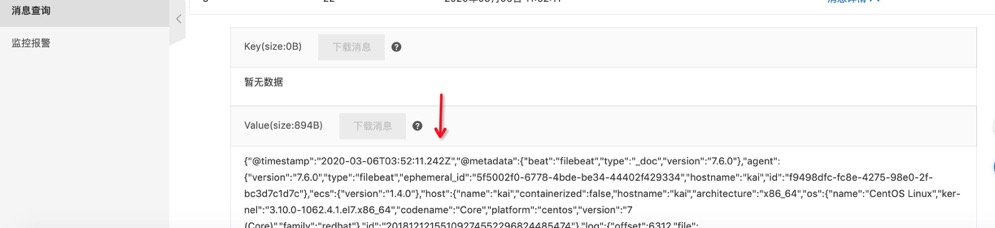

(3) Check the messages pushed into Kafka.

The pushed messages are visible, which confirms that the Filebeat stage works.
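When inspecting these messages, note that with Filebeat's default JSON codec each Kafka record holds a whole event, with the original Nginx line in the `message` field. A minimal sketch of pulling the raw line back out, assuming that codec and the 7.x `log.file.path` field for the source file; the sample record in the test is hypothetical and abridged, and reading records from Kafka (with a client library or the Alibaba Cloud console) is not shown.

```python
import json


def extract_log_line(record_value):
    """Return (source path, raw log line) from one Kafka record written by Filebeat.

    Assumes Filebeat's default JSON codec: the original line is under "message"
    and, for 7.x log inputs, the file path is under log.file.path.
    """
    event = json.loads(record_value.decode("utf-8"))
    path = event.get("log", {}).get("file", {}).get("path")  # None if absent
    return path, event["message"]
```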

Logstash

The configuration file is as follows:

```
input {
```
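The filter stage is where Logstash turns a raw Nginx line into structured fields, commonly with a `grok` filter (the `COMBINEDAPACHELOG` pattern also fits Nginx's default `combined` format). The Python sketch below only illustrates what that parsing produces; it is not the configuration used here, and it assumes the access log uses Nginx's predefined `combined` log format.

```python
import re

# Nginx's predefined "combined" format:
# $remote_addr - $remote_user [$time_local] "$request" $status $body_bytes_sent "$http_referer" "$http_user_agent"
COMBINED = re.compile(
    r'(?P<remote_addr>\S+) - (?P<remote_user>\S+) \[(?P<time_local>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) (?P<body_bytes_sent>\d+) '
    r'"(?P<http_referer>[^"]*)" "(?P<http_user_agent>[^"]*)"'
)


def parse_combined(line):
    """Parse one access-log line into a dict of fields, or return None if it does not match."""
    match = COMBINED.match(line)
    if match is None:
        return None
    fields = match.groupdict()
    fields["status"] = int(fields["status"])
    fields["body_bytes_sent"] = int(fields["body_bytes_sent"])
    # Split "GET /index.html HTTP/1.1" into method, path, and protocol when possible.
    parts = fields["request"].split()
    if len(parts) == 3:
        fields["method"], fields["path"], fields["protocol"] = parts
    return fields
```

Once the fields are separate, Elasticsearch can aggregate on them, for example counting responses by status code in Kibana.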

Elasticsearch

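In the Elasticsearch instance I used, automatic index creation was disabled by default (in a self-managed Elasticsearch, action.auto_create_index defaults to true). Automatic creation is fine in a test environment, but in production it is better to create indices manually; for my tests I enabled it.

You can enable automatic index creation in the configuration file with the following setting:

```yaml
action.auto_create_index: true
```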
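For the production recommendation of creating indices manually, an index is created with a `PUT /<index>` request to the Elasticsearch REST API. A minimal sketch that builds such a request with the standard library, assuming a hypothetical endpoint, index name, and shard/replica counts; authentication (an Alibaba Cloud instance requires a username and password) is not shown.

```python
import json
import urllib.request


def create_index_request(es_url, index, shards=1, replicas=1):
    """Build a PUT /<index> request that creates an index with explicit settings."""
    body = {
        "settings": {
            "number_of_shards": shards,
            "number_of_replicas": replicas,
        }
    }
    return urllib.request.Request(
        url=f"{es_url.rstrip('/')}/{index}",
        data=json.dumps(body).encode("utf-8"),
        method="PUT",
        headers={"Content-Type": "application/json"},
    )
```

Sending it is a matter of `urllib.request.urlopen(create_index_request("http://localhost:9200", "nginx-access"))`. As a middle ground, action.auto_create_index also accepts a comma-separated list of allowed and denied patterns (prefixed with + and -) instead of a plain true or false.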

Kibana

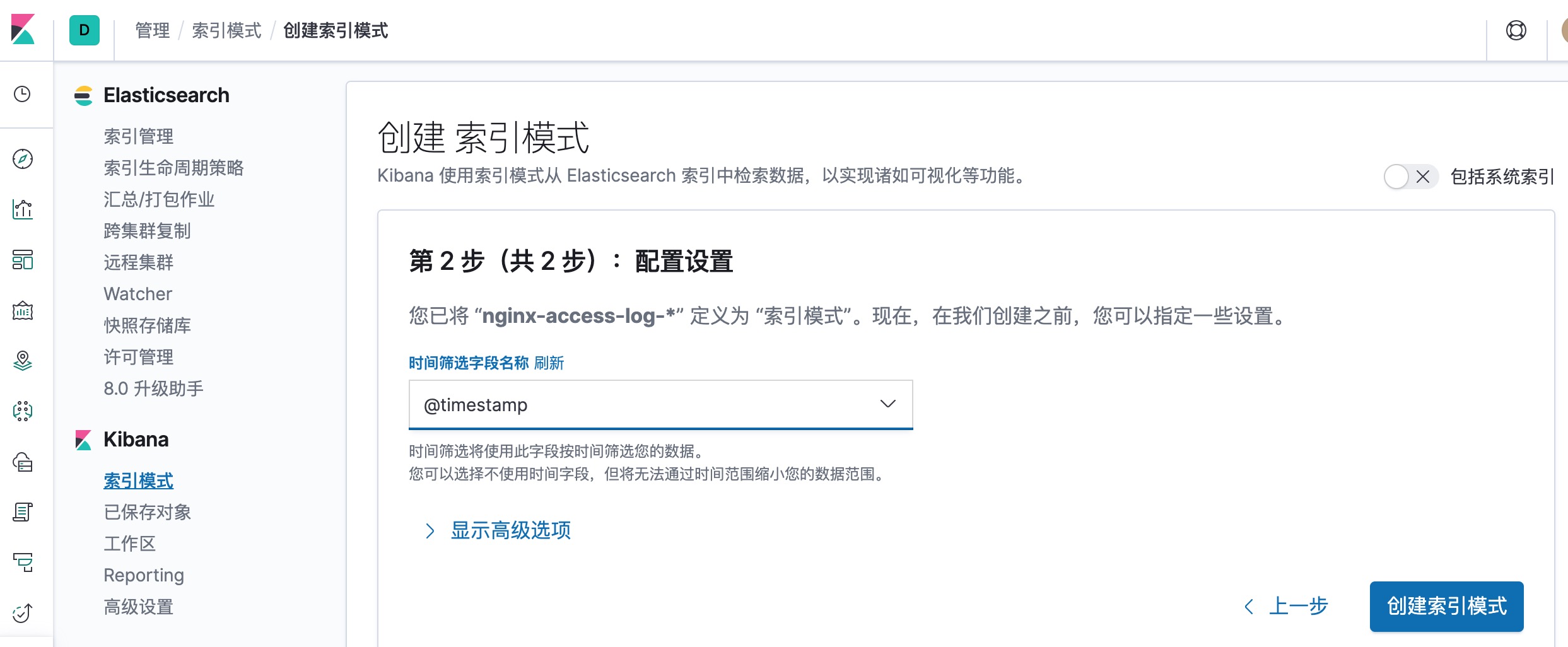

Define an index pattern
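An index pattern tells Kibana which Elasticsearch indices to read, usually with a `*` wildcard so that one pattern covers a series of indices. A minimal sketch of that matching, assuming hypothetical index names; Python's `fnmatchcase` agrees with Kibana only for `*`, since it also treats `?` and `[...]` as wildcards.

```python
from fnmatch import fnmatchcase


def indices_matching(pattern, indices):
    """Return, sorted, the index names a "*"-style wildcard pattern would cover."""
    return sorted(name for name in indices if fnmatchcase(name, pattern))
```

With daily indices such as `nginx-2020.03.10` and `nginx-2020.03.11`, the pattern `nginx-*` covers both without touching unrelated indices.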

View the log data

The website's access logs are now visible in Kibana, which completes the whole log collection pipeline.